As another side project, I am currently trying to convert scanned letters into machine-readable text, using nothing but the scanned image.

The original images, which are preprocessed and then passed to a convolutional neural network (CNN), are scanned A4 letters with a resolution of about 2550x3500 pixels at 300 dpi.

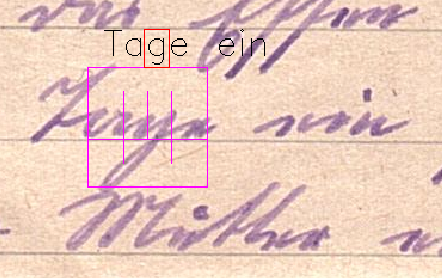

First, a few letters are transcribed by hand to produce the labels required for training. A self-written tool is then used to assign a position to each character in the transcription, as shown in the screenshot above. The tool is written with OpenCV: it previews the next two words, highlights the character currently being labeled, and shows a bounding box as a hint for the later cropping step. (If you want the source, just send me a message; I also plan to release the project once there are some results.)
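
To make the labeling step concrete, here is a minimal sketch of how a transcription could be paired with clicked positions. It is not the actual tool: the `CharLabel` record, the `pair_labels` helper, and the choice to skip whitespace are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CharLabel:
    """One labeled character (hypothetical record layout)."""
    char: str  # the transcribed character
    x: int     # horizontal position in the scan, in pixels
    y: int     # vertical position in the scan, in pixels


def pair_labels(transcription, clicks):
    """Pair each non-whitespace character with one clicked (x, y) position.

    Assumes the clicks were made in reading order and that whitespace
    is not labeled.
    """
    chars = [c for c in transcription if not c.isspace()]
    if len(chars) != len(clicks):
        raise ValueError(f"{len(chars)} characters but {len(clicks)} positions")
    return [CharLabel(c, x, y) for c, (x, y) in zip(chars, clicks)]


# Example: five characters, five clicked positions.
labels = pair_labels("Hi, du", [(10, 5), (20, 5), (28, 9), (50, 5), (60, 5)])
```

Checking the lengths up front catches a skipped or doubled click before it shifts every following label by one character.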

Once every character has a position, the image still needs to be preprocessed and enhanced, because the font color is quite close to the background color. This step can be automated in the future. For each labeled character, a 32x32 px sub-image is cropped. Small pixel translations, scaling, and sine-wave distortions are then applied to each of these crops to generate much more training data for the learning process.
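
The cropping and augmentation described above could be sketched roughly as follows. This is only an illustration using NumPy on a grayscale image, not the project's code: the function names, the ±2 px shift range, the 0.9 to 1.1 scale range, and the sine amplitude and period are all assumed values.

```python
import numpy as np

PATCH = 32  # patch edge length in pixels, as described in the text


def crop_patch(page, cx, cy, size=PATCH):
    """Crop a size x size patch around (cx, cy), padding with edge pixels."""
    half = size // 2
    padded = np.pad(page, half, mode="edge")
    # Original pixel (cy, cx) sits at (cy + half, cx + half) after padding,
    # so this slice places it at index (half, half) of the patch.
    return padded[cy:cy + size, cx:cx + size]


def translate(patch, dx, dy):
    """Shift the content by (dx, dy) pixels; uncovered pixels repeat the edge."""
    h, w = patch.shape
    pad = max(abs(dx), abs(dy))
    padded = np.pad(patch, pad, mode="edge")
    return padded[pad - dy:pad - dy + h, pad - dx:pad - dx + w]


def scale(patch, factor):
    """Rescale about the patch centre with nearest-neighbour sampling."""
    h, w = patch.shape
    ys = np.clip(np.round((np.arange(h) - h / 2) / factor + h / 2).astype(int), 0, h - 1)
    xs = np.clip(np.round((np.arange(w) - w / 2) / factor + w / 2).astype(int), 0, w - 1)
    return patch[np.ix_(ys, xs)]


def sine_warp(patch, amplitude=1.5, period=16.0, phase=0.0):
    """Shift each row horizontally by a sine function of its row index."""
    h, w = patch.shape
    out = np.empty_like(patch)
    for y in range(h):
        shift = int(round(amplitude * np.sin(2 * np.pi * y / period + phase)))
        xs = np.clip(np.arange(w) - shift, 0, w - 1)
        out[y] = patch[y, xs]
    return out


def augment(patch, rng, n=10):
    """Return n randomly distorted copies of one labeled patch."""
    out = []
    for _ in range(n):
        p = translate(patch, int(rng.integers(-2, 3)), int(rng.integers(-2, 3)))
        p = scale(p, rng.uniform(0.9, 1.1))
        p = sine_warp(p, amplitude=rng.uniform(0.0, 1.5),
                      phase=rng.uniform(0.0, 2 * np.pi))
        out.append(p)
    return np.stack(out)


# Example on a synthetic page with a single bright pixel at x=40, y=50.
page = np.zeros((100, 80), dtype=np.uint8)
page[50, 40] = 255
patch = crop_patch(page, 40, 50)
batch = augment(patch, np.random.default_rng(0), n=5)
```

Padding with edge pixels keeps every patch at exactly 32x32 even for characters near the page border, and it avoids introducing black bars that the network might learn as a feature.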

For the CNN, I plan to use TensorFlow, as it has proven to be quite flexible for this kind of task.

I will present the network configuration and further results in the next blog posts :)

Current character counts after labeling two letters:

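| Count | Character |
| --- | --- |
| 16 | `,` |
| 2 | `!` |
| 24 | `.` |
| 2 | `0` |
| 4 | `1` |
| 3 | `2` |
| 1 | `3` |
| 2 | `4` |
| 1 | `9` |
| 71 | `a` |
| 3 | `ä` |
| 22 | `b` |
| 54 | `c` |
| 33 | `d` |
| 162 | `e` |
| 17 | `f` |
| 26 | `g` |
| 76 | `h` |
| 81 | `i` |
| 5 | `j` |
| 13 | `k` |
| 41 | `l` |
| 22 | `m` |
| 100 | `n` |
| 26 | `o` |
| 2 | `ö` |
| 5 | `p` |
| 65 | `r` |
| 48 | `s` |
| 4 | `ß` |
| 57 | `t` |
| 22 | `u` |
| 4 | `ü` |
| 1 | `Ü` |
| 7 | `v` |
| 31 | `w` |
| 13 | `z` |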
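
The counts are quite uneven: `e` has 162 samples, while several characters have only one or two. A small sketch of how such a tally could be produced and rare classes flagged is shown here; the `tally` helper and the `min_samples` threshold are assumptions for illustration, not part of the labeling tool.

```python
from collections import Counter


def tally(chars, min_samples=10):
    """Count labeled characters and list those with fewer than min_samples examples."""
    counts = Counter(chars)
    rare = sorted(c for c, n in counts.items() if n < min_samples)
    return counts, rare


# Example on a short made-up string instead of the real labels.
counts, rare = tally("eine kleine probe".replace(" ", ""), min_samples=2)
print(counts.most_common(3))
print(rare)
```

Characters that end up in the rare list are the ones that would benefit most from additional labeled letters or heavier augmentation.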